T-Test for Model Performance Evaluation

Introductions to t-test for machine learning

Published on January 05, 2022 by Hongyi WANG

statistical evaluations t-test deep learning

3 min READ

Recently one of my reviewers asked me to conduct a t-test on my model to verify if it is statistically different from other sota methods. In this blog, I will briefly introduce t-test and tell you how to use it to evaluate your deep-learning models.

What is T-Test?

So basically t-test is used to find out whether two sets of samples have obvious differences. Since many so-called ‘sota’ methods only have very limited improvements compared with previous works, it is necessary for them to use t-test to prove that their result is not just accidentally better on average. In this article, I will not dig into the principle of t-test, but will only tell you how to use it.

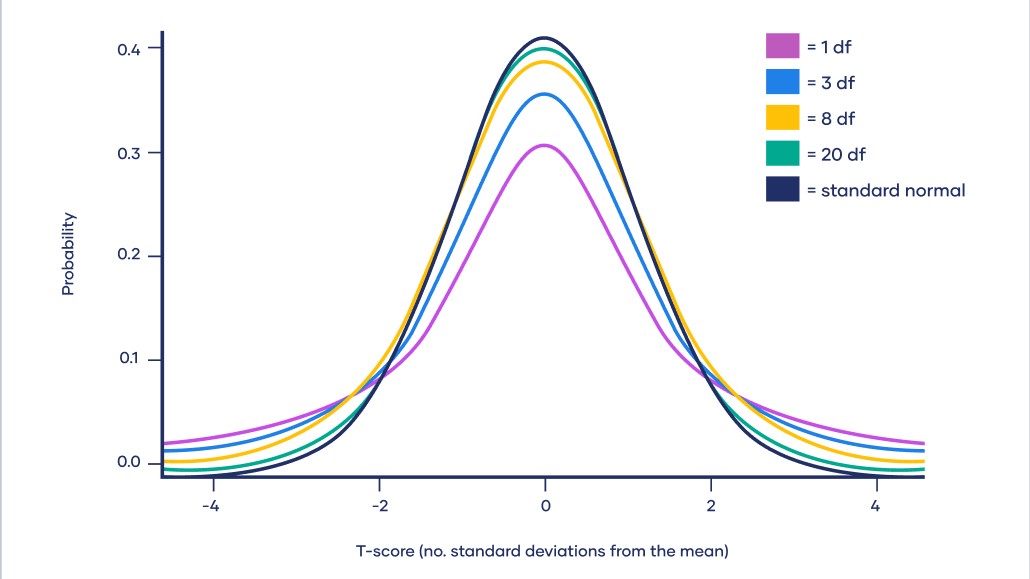

It should be noted that t-test use the assumption that the test samples all correspond to normal distribution. This is certainly not true for many deep-learning test results. However, as long as your results do not show obvious bias (this can only happen when you have too few samples and there exists a lot of noise), t-test is still suitable for reference.

Actually, t-test is seldom used in Computer Science and most of my friends have never heard of it. t-test is often used in medical or financial fields, so if you have any questions about t-test, ask someone in medical or finance school.

Three kinds of T-Test

Basically, there are 3 types of t-test.

- One-Sample t-test.

- Independent-Samples t-test.

- Paired t-test.

If you want to compare your method with other methods on the same test set, paired t-test is what you should use.

How to compute the T-Test result?

There are many existing tools for you to do that. R language, SPSS, and even Microsoft Excel. However, if you wish to use t-test for your experiment’s statistical analysis, I will probably recommend IBM SPSS for you. (You can get a 30-day trial from IBM each year. )

To use SPSS for paired t-test, you have to first import the test result of each case into SPSS. For your method, you list all the results (such as Dice for segmentation) in the same column. For the other method, list the results in another column with the exact same order. Some datasets may contain several classes, and if you would like to analyze each class separately, you should record the result of each class in different columns.

Then, Click ‘Analyze -> Mean Comparison -> Paired T-Test’ in the menu bar.

Then there will be a pop-up window. Select the two columns that you want to compare, then process.

SPSS will pop up a detailed table for your results. It may be a little confusing to understand at first. But for you, a CS student, you can directly go to the table with ‘p-value’ in the last column. If you have a p-value < 0.05, then it indicates that your result has a statistical difference from the other method.

You may also notice that there are actually two ‘p-value’s in the table, which are one-tailed p-value and two-tailed p-value. The one-tailed p-value is the one you should take as a reference only when you know that your method is better than the other one. However, if you are not so sure about this, you should use a two-tailed p-value because this one is computed from both directions.

To use t-test in Python, you can import the scipy library and use scipy.stats.ttest_rel() to get the results

What does paired T-Test tell us?

Paired t-test is actually based on the subtractions of two methods’ performance on the same case. If we have a p-value > 0.05, then maybe it is only a coincidence that your method is better than the other one. For multi-class datasets, this could also happen because different methods have special advantages in processing different classes.